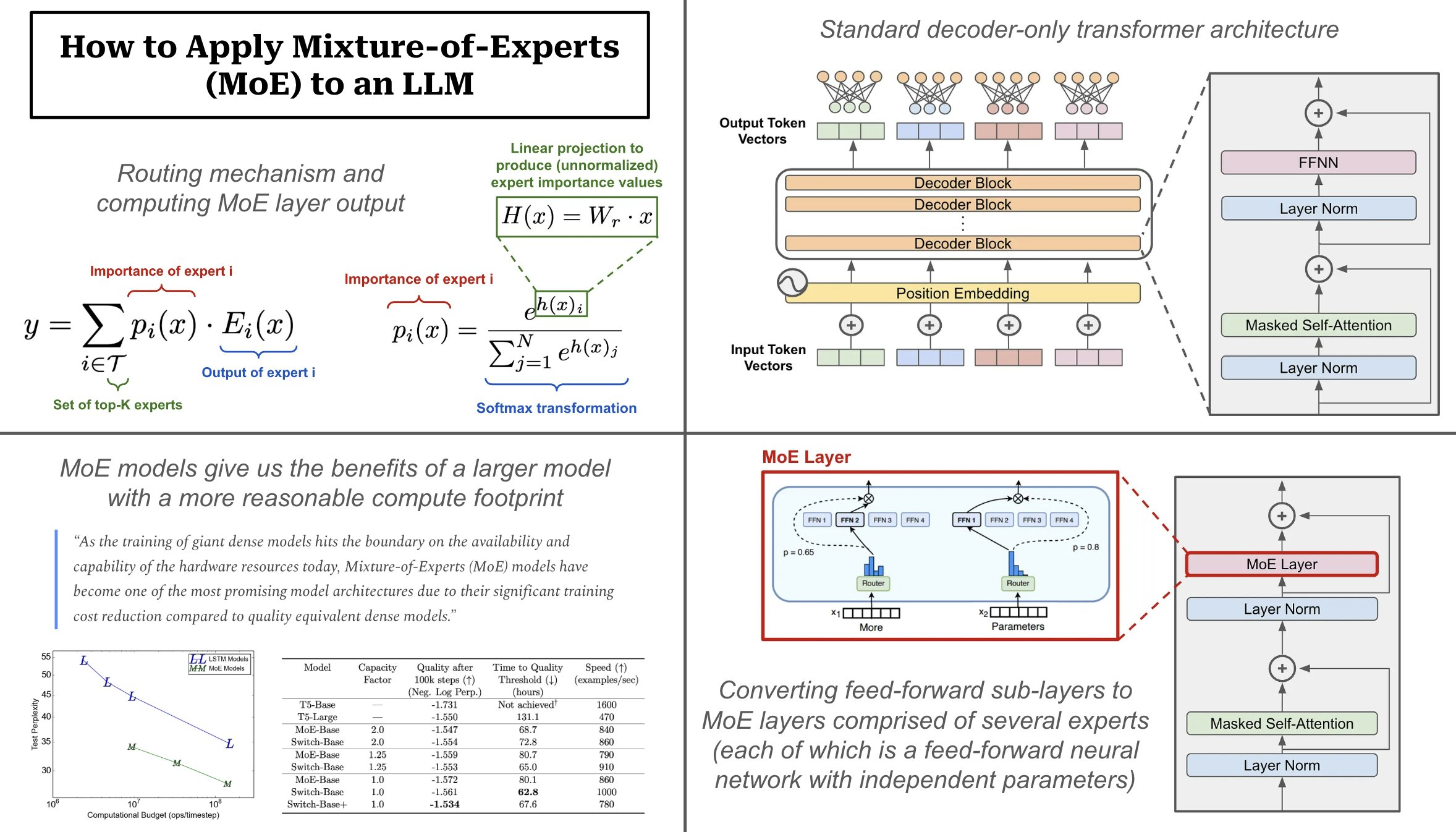

[Ed. note: This article comes from a Tweet first posted here. We’ve partnered with Cameron Wolfe to give his insights a wider audience, as we think they shed light on various topics around GenAI.]

Mixture of experts (MoE) has emerged as a technique for improving LLM performance.
